Running Ollama on NixOS as a backend for Nextcloud Assistant

So Nextcloud got the ability a while back to make use of LLMs for various things, like speech to text, text summarization and image generation. Sounded like a handy add-on, and it turns out I had the hardware needed to self-host it, kinda. There are some limitations, but we’ll get to that.

First thing I needed to do was figure out how to run the LLM, and a quick search turned up a couple of different options. The most obvious one for me to try was Ollama, as it is in the Nix repos. Installing it was pretty simple: just add the following to your configuration.nix.

    services = {
      ollama = {
        enable = true;
        acceleration = "cuda";
        openFirewall = true;
        loadModels = [ "llama3.1:8b" ];
        host = "100.84.238.20";
      };
    };

- `enable = true;` is pretty straightforward. It installs Ollama and runs it as a service.
- `acceleration = "cuda";` tells the Ollama service to use my NVIDIA GPU to speed things up. It was incredibly painful to use without that acceleration.
- `openFirewall = true;` does exactly what it sounds like. It opens the firewall to allow access to the LLM.
- `loadModels = [ "llama3.1:8b" ];` tells the module what model you want to run. You can select any model from the Ollama library (or one Ollama can pull from HuggingFace), assuming your system can run it. If not, `nixos-rebuild` will fail.
- `host` tells the module which address it should listen on for requests. It defaults to localhost.
- Additionally, you can specify what port you want Ollama to use, which would look like `port = 11434;`. The default port is 11434 and I had no reason to change it.
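For reference, here is a rough sketch of how the optional `port` setting would sit next to the other options. The values are the ones from this post. The `{ ... }:` wrapper is just an assumption about how your configuration.nix is laid out, so adapt it to your own file.

```nix
# configuration.nix (excerpt) -- a sketch, not a complete system config
{ ... }:
{
  services.ollama = {
    enable = true;                   # install Ollama and run it as a service
    acceleration = "cuda";           # use the NVIDIA GPU
    host = "100.84.238.20";          # address to listen on (defaults to localhost)
    port = 11434;                    # optional; 11434 is already the default
    openFirewall = true;             # open the port above in the firewall
    loadModels = [ "llama3.1:8b" ];  # model(s) to pull when the service starts
  };
}
```

With these values the service should end up reachable at `http://100.84.238.20:11434`, which is the address that goes into Nextcloud later.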

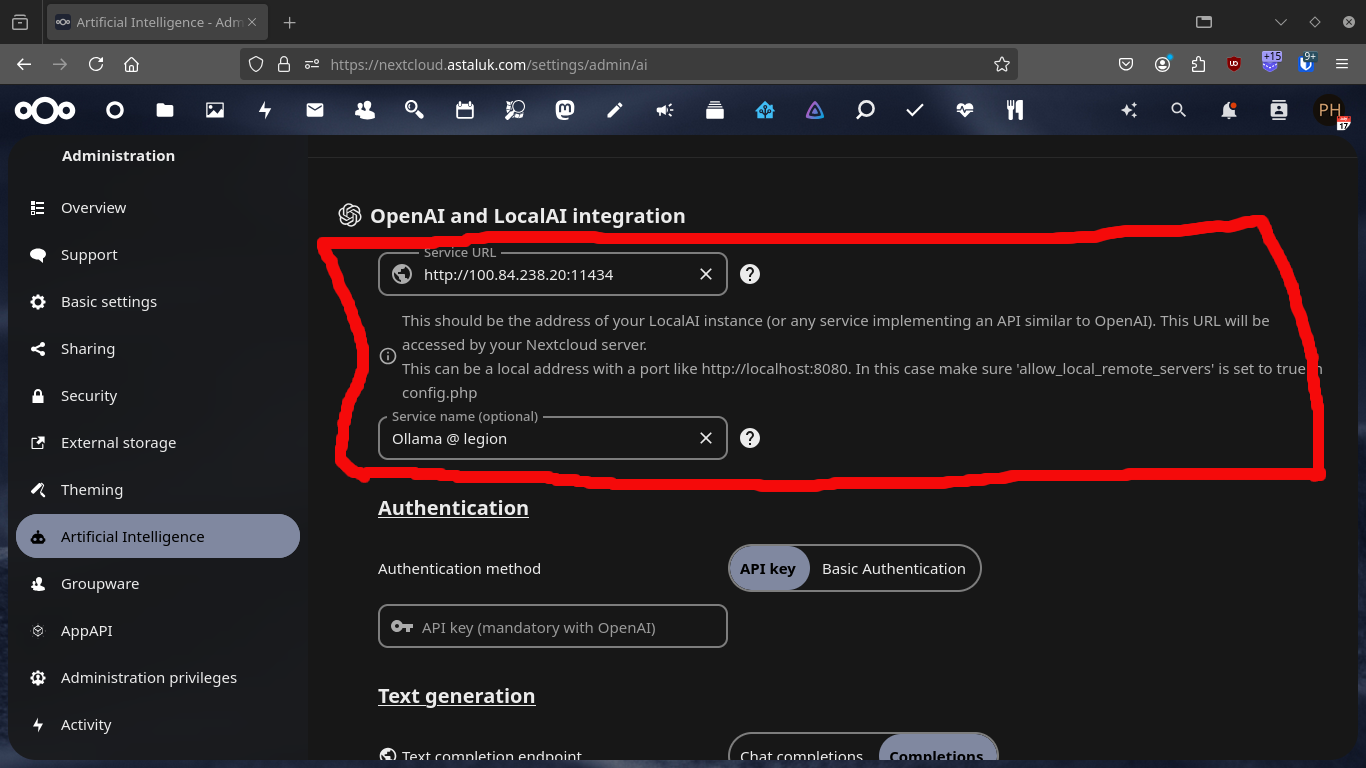

Now we do a rebuild and we should be ready to set up the Nextcloud side. Log in to Nextcloud as your admin user, go to the Administration page, select “Artificial Intelligence” and scroll down a ways.

In the service URL box we’ll put the Ollama host IP address and the port number, and in the second box we can put a name for the service. You may need to do some tweaking of the other settings, and unfortunately Ollama does not support image generation, but things should be good for text generation now.